Wednesday, 16 November 2011

What is a Botnet?

Computers that are co-opted to serve in a zombie army are often those whose owners fail to provide effective firewalls and other safeguards. An increasing number of home users have high-speed connections for computers that may be inadequately protected. A zombie or bot is often created through an Internet port that has been left open and through which a small Trojan horse program can be left for future activation. At a certain time, the zombie army "controller" can unleash the effects of the army by sending a single command, possibly from an Internet Relay Chat (IRC) site.

The computers that form a botnet can be programmed to redirect transmissions to a specific computer, such as a Web site that can be closed down by having to handle too much traffic (a distributed denial-of-service, or DDoS, attack), or, in the case of spam distribution, to many computers. The motivation for a zombie master who creates a DDoS attack may be to cripple a competitor. The motivation for a zombie master sending spam is the money to be made. Both rely on unprotected computers that can be turned into zombies.

According to the Symantec Internet Security Threat Report, through the first six months of 2006, there were 4,696,903 active botnet computers.

Credits to:

http://www.readwriteweb.com/archives/is_your_pc_part_of_a_botnet.php

http://searchsecurity.techtarget.com/definition/botnet

Friday, 11 November 2011

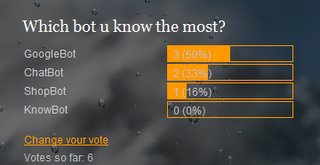

Seventh Poll Result Analysis

The picture shows the results of last week's poll, in which we asked which bot you know the most about. Most of the voters chose "Googlebot".

Thursday, 10 November 2011

Computer game bot

In Multi-User Domain games (MUDs), players may use bots to perform laborious tasks for them, sometimes even the bulk of the gameplay. Although the practice is prohibited in most MUDs, players have an incentive to use bots: they save time while the bot accumulates resources, such as experience, for the player character.

Aim Bot

An aimbot (sometimes called "auto-aim") is a type of computer game bot used in first-person shooter games to provide varying levels of target acquisition assistance to the player. It is sometimes incorporated as a feature of a game (where it is usually called "auto-aim" or "aiming assist"). However, using a more powerful aimbot in multiplayer games is considered cheating, as it gives the user an advantage over unaided players.

Aimbots have varying levels of effectiveness. Some aimbots can do all of the aiming and shooting, requiring the user only to move into a position where opponents are visible. This level of automation usually makes an aimbot difficult to hide; for example, the player might make inhumanly fast turns that always end with his or her crosshairs on an opponent's head. Companies such as Valve have employed numerous anti-cheat mechanisms to prevent aimbot use and avoid accusations of cheating.

Some games offer "auto-aim" as an in-game option. This is not the same as an aimbot; it simply helps the user aim when playing offline against computer opponents, usually by slowing the movement of 'looking/aiming' while the crosshair is on or near a target. It is common for console FPS games to have this feature to compensate for the lack of precision of analog-stick control pads.
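The auto-aim option described above can be sketched as a function that scales down the player's turning speed near a target. This is a minimal illustration, assuming the game can report the angle between the crosshair and the nearest target; the function name, the 5-degree radius and the 40% minimum speed are hypothetical tuning values, not taken from any real game.

```python
def assisted_look_speed(stick_speed: float, angle_to_target: float,
                        assist_radius: float = 5.0,
                        min_factor: float = 0.4) -> float:
    """Return the look speed after a simple 'slow down near a target' assist.

    stick_speed:     turning speed requested by the analog stick (degrees/second)
    angle_to_target: angle between the crosshair and the nearest target (degrees)
    assist_radius:   hypothetical angle inside which the assist starts working
    min_factor:      hypothetical fraction of speed kept when exactly on target
    """
    if angle_to_target >= assist_radius:
        return stick_speed  # no target nearby: the player's input is untouched
    # Blend linearly from min_factor (on the target) up to 1.0 (edge of the radius).
    fraction = angle_to_target / assist_radius
    factor = min_factor + (1.0 - min_factor) * fraction
    return stick_speed * factor


print(assisted_look_speed(100.0, 10.0))  # far from a target: full speed
print(assisted_look_speed(100.0, 0.0))   # on a target: slowed to 40% of the input
```

A linear blend keeps the slowdown gradual, so the crosshair feels "sticky" near a target without snapping onto it, which is what separates this kind of assist from an aimbot.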

Credits to:

http://gamebots.sourceforge.net/

http://mmohuts.com/review/bots

IRC BOT

Tuesday, 8 November 2011

Knowbots

A knowbot is a kind of bot that automatically gathers certain specified information from websites. A knowbot is more frequently called an intelligent agent or simply an agent. It should not be confused with a search engine crawler or spider. A crawler or spider program visits Web sites and gathers information according to some generalized criteria, and this information is then indexed so that many individual users can use it for searching. A knowbot works with specific and easily changed criteria that conform to or anticipate the needs of the user or users. Its results are then organized for presentation but not necessarily for searching. An example would be a knowbot (sometimes also called a newsbot) that visits major news-oriented Web sites each morning and provides a digest of stories (or links to them) for a personalized news page.
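The knowbot idea of "specific and easily changed criteria" can be sketched in a few lines of Python. This minimal example assumes the headlines have already been collected from the news sites (the `Story` records and example.com URLs are hypothetical) and filters them against a user's interest keywords to build the personalized digest.

```python
from dataclasses import dataclass


@dataclass
class Story:
    site: str
    headline: str
    url: str


def build_digest(stories: list[Story], interests: set[str]) -> list[Story]:
    """Keep only the stories whose headline mentions one of the user's interests.

    The interests are the knowbot's "specific and easily changed criteria":
    changing the set changes tomorrow's digest, with no re-indexing needed.
    """
    wanted = {word.lower() for word in interests}
    digest = []
    for story in stories:
        # Split the headline into lowercase words, ignoring surrounding punctuation.
        words = {w.strip(".,!?:;\"'").lower() for w in story.headline.split()}
        if words & wanted:
            digest.append(story)
    return digest


# Hypothetical headlines standing in for what the bot collected this morning.
stories = [
    Story("news.example.com", "Botnet takedown cuts spam levels", "http://news.example.com/1"),
    Story("news.example.com", "Local team wins the final", "http://news.example.com/2"),
    Story("tech.example.org", "New malware targets online banking", "http://tech.example.org/7"),
]
for story in build_digest(stories, {"botnet", "malware"}):
    print(f"{story.headline} ({story.url})")
```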

Friday, 4 November 2011

New ZeuS bot could be antivirus-proof

Trend Micro said the variant, detected as TSPY_ZBOT.IMQU, uses a new encryption-decryption algorithm and makes it harder for anti-virus programs to clean its infection.

“If a machine is infected with ZeuS, calling (API GetFileAttributesExW) via a specific parameter would return with the bot information, which includes bot name, bot version, and a pointer to a function that will uninstall the bot. Antivirus software may utilize this function to identify ZeuS bot information and to clean ZeuS infection automatically. However, the new version of ZeuS also updated this functionality and removed the pointer to the bot uninstall function, thus, eliminating the opportunities for AVs to utilize this function," it said in a blog post.

It also said that current trackers may fail to decrypt this new version's configuration file because of its updated encryption/decryption routine.

The new variant does not use the RC4 encryption algorithm but an updated encryption/decryption algorithm instead, Trend Micro added.

"We believe this is a private version of a modified ZeuS and is created by a private professional gang comparable to LICAT. Though we have yet to see someone sell this new version of toolkit on underground forums, we expect that we will see more similar variants which will emerge in the not-so-distant future," it said.

Trend Micro said the new malware targets a wide selection of financial firms including those in the United States, Spain, Brazil, Germany, Belgium, France, Italy, and Ireland.

More interestingly, it targets HSBC Hong Kong, which suggests that this new ZeuS variant may be used in a global campaign that may already include Asian countries, it added.

It said the emergence of these latest ZeuS variants implies that ZeuS is still a very profitable piece of malware and that cybercriminals are continuously investing in the leaked source code.

Credits to:

http://www.gmanews.tv/story/231604/technology/new-zeus-bot-could-be-antivirus-proof

Thursday, 3 November 2011

Sixth Poll Result Analysis

Tuesday, 1 November 2011

Yahoo Bot

Search engines like Yahoo! use bots to crawl through websites and gather information, returning a list of relevant websites to users who type search terms into them. Bots return up-to-date information on currently active websites but have been known to return defunct websites.

Yahoo! Slurp

Yahoo! Slurp is the recognized name of the bot that the Yahoo! search engine uses. It is a different bot from Googlebot for Google and Bingbot for Bing. Yahoo! Slurp is based on Web crawler architecture developed for Inktomi. The Yahoo! Slurp bot crawls through websites and creates a virtual copy of them to be used later by the search engine.

Bot Tasks

The Yahoo! Slurp bot performs three basic actions for the Yahoo! search engine. First, it retrieves Web server website information. Then the bot analyzes the website content for relevant information. Finally, it files the information to be used in site indexing. The bot itself is little more than an automated script performing these three tasks over and over. However, it can do it at incredibly fast speeds. A Yahoo! search engine results page takes mere seconds to load. It wouldn't be possible without the bot.
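The retrieve, analyze and file cycle can be sketched as three small Python functions. Yahoo! has not published Slurp's actual code, so this is only a generic illustration: the function names are hypothetical, `analyze` simply lowercases the visible words, and "filing" is assumed to mean adding each word to an inverted index (word to set of URLs). The sample run feeds in an HTML string so it works without network access.

```python
import re
from html.parser import HTMLParser
from urllib.request import urlopen


def retrieve(url: str) -> str:
    """Step 1: download the page from the Web server."""
    with urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def analyze(html: str) -> list[str]:
    """Step 2: pull the lowercase words out of the page content."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return re.findall(r"[a-z0-9]+", " ".join(parser.chunks).lower())


def file_page(index: dict[str, set[str]], url: str, words: list[str]) -> None:
    """Step 3: file the words in an inverted index (word -> set of URLs)."""
    for word in words:
        index.setdefault(word, set()).add(url)


def crawl_once(index: dict[str, set[str]], url: str) -> None:
    """One full cycle for a live URL (needs network access)."""
    file_page(index, url, analyze(retrieve(url)))


index: dict[str, set[str]] = {}
sample = ("<html><body><h1>Web bots</h1><p>Bots crawl pages.</p>"
          "<script>var x = 1;</script></body></html>")
file_page(index, "http://www.example.com/", analyze(sample))
print(sorted(index))
```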

Site Indexing

The process of ordering the websites that the Yahoo! Slurp bot has found is called site indexing. Websites are ranked according to their relevancy to search terms and their individual ranks within the Yahoo! search engine. The more popular and integral to the Internet the site is, the more rank it obtains and the higher it is when Yahoo! returns its search results. The Yahoo! Slurp bot helps index the sites for Yahoo!
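As a rough illustration of ranking, the sketch below scores each page by how often the search terms appear on it, multiplied by a per-site popularity value. The scoring formula and the `popularity` numbers are assumptions made for the example; real search engines combine many more signals.

```python
from collections import Counter


def rank_pages(pages: dict[str, list[str]], popularity: dict[str, float],
               query: str) -> list[tuple[str, float]]:
    """Order pages by (query-term matches) x (site popularity).

    pages:      url -> words found on the page (e.g. the output of a crawler)
    popularity: url -> hypothetical popularity score; missing sites count as 1.0
    """
    terms = query.lower().split()
    results = []
    for url, words in pages.items():
        counts = Counter(words)
        relevance = sum(counts[term] for term in terms)
        if relevance:  # pages without any query term are left out
            results.append((url, relevance * popularity.get(url, 1.0)))
    # Highest score first; ties broken alphabetically so the order is stable.
    results.sort(key=lambda item: (-item[1], item[0]))
    return results


pages = {
    "http://a.example.com/": ["web", "bot", "bot", "crawler"],
    "http://b.example.com/": ["web", "bot"],
    "http://c.example.com/": ["cooking", "recipes"],
}
print(rank_pages(pages, {"http://b.example.com/": 3.0}, "bot"))
```

Here the more popular site outranks the page that mentions "bot" more often, which mirrors the idea that a site's individual rank matters as well as its relevance.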

How the Bot Searches

The Yahoo! Slurp bot follows four policies when retrieving website information. A selection policy determines which Web pages to download, and a re-visit policy determines when pages are checked for updated information. A politeness policy prevents websites from being overloaded by the crawler, and a parallelization policy coordinates the Yahoo! Slurp bot with other Web crawlers.
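Two of the four policies, politeness and re-visit, boil down to remembering when a host or page was last contacted. The class below is a minimal sketch of that bookkeeping; the class name and the 10-second and one-day defaults are hypothetical, and the selection and parallelization policies are not covered. The clock is injectable so the logic can be tested without waiting.

```python
import time
from urllib.parse import urlsplit


class PoliteScheduler:
    """Decides whether a URL may be fetched now.

    politeness_delay: minimum seconds between two requests to the same host.
    revisit_interval: minimum seconds before the same URL is fetched again.
    Both defaults are hypothetical, not Yahoo! Slurp's real settings.
    """

    def __init__(self, politeness_delay=10.0, revisit_interval=86400.0,
                 clock=time.monotonic):
        self.politeness_delay = politeness_delay
        self.revisit_interval = revisit_interval
        self.clock = clock
        self.last_host_hit = {}   # host -> time of the last request to it
        self.last_url_fetch = {}  # url -> time the page was last fetched

    def may_fetch(self, url: str) -> bool:
        now = self.clock()
        host_time = self.last_host_hit.get(urlsplit(url).netloc)
        if host_time is not None and now - host_time < self.politeness_delay:
            return False  # politeness policy: this host was contacted too recently
        url_time = self.last_url_fetch.get(url)
        if url_time is not None and now - url_time < self.revisit_interval:
            return False  # re-visit policy: this page was checked recently enough
        return True

    def record_fetch(self, url: str) -> None:
        now = self.clock()
        self.last_host_hit[urlsplit(url).netloc] = now
        self.last_url_fetch[url] = now
```

A crawler would call `may_fetch(url)` before each download and `record_fetch(url)` after it; URLs that are refused are simply put back in the queue for later.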

Monday, 31 October 2011

Chat Bot

Friday, 28 October 2011

Web Bot Contest Answer is Released!!

Thursday, 27 October 2011

Fifth Poll Result Analysis

The title of last week's poll was "Do you guys know what is Malware Bots?" We created a pie chart of the vote results.

The figure above shows that there were only 2 votes in total: 1 person voted "Yes" and the other voted "I don't know what is that."

In conclusion, according to the chart above, only 1 person voted that he or she knows about malware bots, which means that at least some of our readers know what malware bots are. Lastly, we will continue to improve our blog and share more interesting information with our readers.

Web Scraping & techniques

Techniques for Web scraping

Web scraping is the process of automatically collecting Web information. It is a field with active developments that shares a common goal with the semantic web vision, an ambitious initiative that still requires breakthroughs in text processing, semantic understanding, artificial intelligence and human-computer interaction. Web scraping, instead, favors practical solutions based on existing technologies that are often entirely ad hoc. Therefore, existing Web-scraping technologies provide different levels of automation:

1.) Human copy-and-paste: Sometimes even the best Web-scraping technology cannot replace a human’s manual examination and copy-and-paste, and sometimes this may be the only workable solution when the websites for scraping explicitly set up barriers to prevent machine automation.

2.) Text grepping and regular expression matching: A simple yet powerful approach to extract information from Web pages can be based on the UNIX grep command or regular expression matching facilities of programming languages (for instance Perl or Python).

3.) HTTP programming: Static and dynamic Web pages can be retrieved by posting HTTP requests to the remote Web server using socket programming.

4.) DOM parsing: By embedding a full-fledged Web browser, such as the Internet Explorer or Mozilla Web browser control, programs can retrieve the dynamic content generated by client-side scripts. These Web browser controls also parse Web pages into a DOM tree, from which programs can retrieve parts of the Web pages.

5.) HTML parsers: Some semi-structured data query languages, such as XQuery and the HTQL, can be used to parse HTML pages and to retrieve and transform Web content.

6.) Web-scraping software: There are many Web-scraping software tools available that can be used to customize Web-scraping solutions. These tools may attempt to automatically recognize the data structure of a page, or provide a Web recording interface that removes the need to write Web-scraping code manually, scripting functions that can be used to extract and transform Web content, and database interfaces that can store the scraped data in local databases.

7.) Vertical aggregation platforms: Several companies have developed vertical-specific harvesting platforms. These platforms create and monitor a multitude of "bots" for specific verticals with no man in the loop and no work related to a specific target site. The preparation involves establishing the knowledge base for the entire vertical, and then the platform creates the bots automatically. The platform's robustness is measured by the quality of the information it retrieves (usually the number of fields) and its scalability (how quickly it can scale up to hundreds or thousands of sites). This scalability is mostly used to target the Long Tail of sites that common aggregators find too complicated or too labor-intensive to harvest content from.

8.) Semantic annotation recognizing: Web pages may contain metadata or semantic markups/annotations that can be used to locate specific data snippets. If the annotations are embedded in the pages, as microformats do, this technique can be viewed as a special case of DOM parsing. In another case, the annotations, organized into a semantic layer, are stored and managed separately from the Web pages, so Web scrapers can retrieve the data schema and instructions from this layer before scraping the pages.
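Item 8 can be illustrated with microformats, where annotations are ordinary `class` attributes such as `class="fn"` (formatted name) in an hCard. The sketch below uses Python's standard `html.parser` module to collect the text of elements carrying a given class; it assumes the annotations are embedded in the page, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser

# Void elements never get an end tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextFinder(HTMLParser):
    """Collects the text of every element that carries a given class name,
    e.g. class="fn" (the formatted name) in an hCard microformat."""

    def __init__(self, class_name: str):
        super().__init__()
        self.class_name = class_name
        self.found = []
        self._depth = 0    # > 0 while inside a matching element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1  # a child element inside the match
        elif self.class_name in (dict(attrs).get("class") or "").split():
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            # Collapse runs of whitespace in the collected text.
            self.found.append(" ".join("".join(self._buffer).split()))

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def find_class_text(html: str, class_name: str) -> list[str]:
    finder = ClassTextFinder(class_name)
    finder.feed(html)
    finder.close()
    return finder.found


page = ('<div class="vcard"><span class="fn">Jane Doe</span>'
        '<span class="org">Example Ltd</span></div>')
print(find_class_text(page, "fn"))
```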

The eight approaches listed above are the main techniques of Web scraping.
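Items 2 and 3 in the list above combine naturally: fetch a page with a hand-written HTTP request over a socket, then grep the result with a regular expression. This is a minimal sketch in Python: it speaks plain HTTP/1.0 only (no HTTPS, redirects or error handling), the `User-Agent` name is hypothetical, and the link pattern is a simple regex that will miss unusual markup, which is the usual trade-off of regex-based scraping.

```python
import re
import socket


def build_get_request(host: str, path: str = "/") -> bytes:
    """Compose a minimal GET request by hand.

    HTTP/1.0 is used so the server closes the connection after the response
    and does not use chunked transfer encoding.
    """
    lines = [
        f"GET {path} HTTP/1.0",
        f"Host: {host}",
        "User-Agent: example-scraper/0.1",  # hypothetical bot name
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def http_get(host: str, path: str = "/", port: int = 80) -> str:
    """Send the request over a plain TCP socket and return the raw response
    (status line, headers and body) as text."""
    with socket.create_connection((host, port), timeout=10) as sock:
        sock.sendall(build_get_request(host, path))
        chunks = []
        while chunk := sock.recv(4096):  # read until the server closes
            chunks.append(chunk)
    return b"".join(chunks).decode("utf-8", errors="replace")


# Grep the links out of the page text with a regular expression.
LINK_RE = re.compile(r"""<a\s[^>]*?href\s*=\s*["']([^"']+)["']""", re.IGNORECASE)


def grep_links(html: str) -> list[str]:
    return LINK_RE.findall(html)


# Usage (needs network access):
#   response = http_get("example.com")
#   print(grep_links(response))
```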

http://www.mozenda.com/web-scraping

SenseBot

Clever Bot

Wednesday, 19 October 2011

Web Bot-Webscraper

There is some really great webscraper software on the market now. Webscraper software can be an invaluable tool in building a new business and in any endeavor requiring extensive research. The new generation of programs incorporates an easy-to-use GUI with well-defined options and features. It has been estimated that what normally takes 20 man-days can now be performed by these programs in only 3 hours. With a reduction in manpower, costs are trimmed and project timelines are moved up. Webscraper programs also eliminate the human error that is so common in such a repetitive task. In the long run, the hundreds of thousands of dollars saved are worth the initial investment.

Video of a screen scraper software, Competitor Data Harvest:

http://www.youtube.com/watch?v=uU91vOsS6xc&feature=player_embedded

Extract Data from the website

Data extraction from a website can be done easily with extraction software. What does data extraction mean? It is the process of pulling information from a specific Internet source, assembling it and then parsing it so that it can be presented in a useful form. With a good data extraction tool, the process is quick and easy. Anyone can do it, and it is simple to extract and store data in order to publish it elsewhere. Software for extracting data from websites is used by many people with impressive results, and information of all types can be readily harvested.
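The "extract and store data to publish it elsewhere" step is often just writing the extracted fields to a format other tools can read. A minimal sketch, assuming the scraper has already produced one dictionary per record (the field names and example.com URLs are hypothetical), using Python's standard `csv` module:

```python
import csv
import io


def records_to_csv(records: list[dict[str, str]], fieldnames: list[str]) -> str:
    """Assemble extracted records into CSV text ready to store or publish."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)  # fields containing commas are quoted automatically
    return buffer.getvalue()


# Hypothetical records, as a scraper might have parsed them from product pages.
records = [
    {"product": "USB cable", "price": "9.90", "source": "http://shop.example.com/usb"},
    {"product": "Mouse, wireless", "price": "45.00", "source": "http://shop.example.com/mouse"},
]
print(records_to_csv(records, ["product", "price", "source"]))
```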

Free Data Mining Software

There is now a lot of free data mining software available for download on the Internet. This software automates the laborious task of web research. Instead of using search engines and click, click, clicking through pages, tiring and straining the eyes, and then using archaic copy-and-paste into a second application, the whole process can be set to run while we relax and watch television. Data mining used to be an expensive proposition that only the biggest businesses could afford, but now there is free data mining software that individuals with basic needs can use to their satisfaction. Many people swear by the free programs and have found no need to go further.

Create data feeds

Creating data feeds, especially RSS feeds, is the process of publishing web content in an online form for easy distribution of information. RSS has enabled content from the Internet to be distributed globally. Any type of information located on the web can be turned into a data feed, whether it is for a blog or a news release. The benefit of using a web scraper for these purposes is that your information is easily captured, formatted and syndicated. Cartoonists and writers in the newspaper business create data feeds so that their work can be disseminated to readers. This process has greatly enhanced the sharing of information among many people.
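A web scraper's output can be syndicated by wrapping it in an RSS 2.0 document. The sketch below uses Python's standard `xml.etree.ElementTree` to build a channel with the three required elements (`title`, `link`, `description`) and one `item` per scraped entry; the feed name, URLs and item contents are hypothetical.

```python
import xml.etree.ElementTree as ET


def build_rss(title: str, link: str, description: str,
              items: list[dict[str, str]]) -> str:
    """Return an RSS 2.0 document as a string.

    items: dicts with "title", "link" and "description" keys, e.g. the
    records a web scraper produced.
    """
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for entry in items:
        item = ET.SubElement(channel, "item")
        for key in ("title", "link", "description"):
            ET.SubElement(item, key).text = entry[key]
    return ET.tostring(rss, encoding="unicode")


feed = build_rss(
    "Web Bot blog", "http://webbot.example.com/", "Posts about Internet bots",
    [{"title": "What is a Botnet?", "link": "http://webbot.example.com/botnet",
      "description": "How zombie computers are recruited."}],
)
print(feed)
```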

Deep Web Searching

The deep web is the part of the Internet that you cannot see. Traditional search engines and bots cannot find all of its data and information. Deep web searching needs to be done by programs that know specifically how to find this information and extract it. Information found in the deep web includes PDF, Word, Excel and PowerPoint documents that are used to create web pages. Having access to this information can be very valuable to business owners and law enforcement, since much of it is information that the rest of the public cannot access.

Credits to:

http://www.mozenda.com/web-bot

Tuesday, 18 October 2011

Crossword puzzle

Good Luck!!!

Across

- A search engine spider

- Another name for a web crawler

- retrieves information from websites

- A software application that does repetitive and automated tasks in the Internet

- Sends spam emails and typically works automatically

- Uses the Inktomi database

Down

- A special type of bot that is used to check prices

- Another name for a web crawler

- Crawling, indexing and serving are the key processes in delivering search results.

Like any program, a bot can be used for good or for evil. "Evil" web crawlers scan web pages for email addresses, instant messaging handles and other identifying information that can be used for spamming purposes. Crawlers can also engage in click fraud, or the process of visiting an advertising link for the purpose of driving up a pay-per-click advertiser's cost. Spambots send hundreds of unsolicited emails and instant messages per second. Malware bots can even be programmed to play games and collect prizes from some websites.

A malware bot is a type of bot that allows an attacker to gain complete control over the affected computer. Computers that are infected with a 'bot' are generally referred to as 'zombies'.

There are literally tens of thousands of computers on the Internet that are infected with some type of 'bot' without their owners even realizing it.

Attackers are able to access and activate them to help execute DoS attacks against web sites, host phishing attack Web sites or send out thousands of spam email messages. Should anyone trace the attack back to its source, they will find an unwitting victim rather than the true attacker.

DoS stands for denial of service. The most dangerous malware bots are those used to inject viruses or malware into websites, or to scan them for security vulnerabilities to exploit.

It's no surprise that blogs and e-commerce sites that become targets of these practices end up being hacked and injected with porn or Viagra links. All these undesirable bots tend to look for information that is normally off limits, and in most situations they completely disregard robots.txt commands.
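By contrast, a well-behaved bot checks robots.txt before fetching a page. Python's standard library includes a parser for this file; the sketch below feeds it a sample robots.txt instead of downloading one, and `ExampleBot` is a hypothetical user-agent name.

```python
from urllib.robotparser import RobotFileParser

# A sample robots.txt; a real bot would download it from /robots.txt on each site.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.modified()  # mark the rules as loaded so can_fetch() does not refuse everything
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in ("http://www.example.com/index.html",
            "http://www.example.com/private/orders.html"):
    print(url, is_allowed(SAMPLE_ROBOTS_TXT, "ExampleBot", url))
```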

Detecting infection

The flood of communications in and out of your PC helps antimalware apps detect a known bot. "Sadly, the lack of antivirus alerts isn't an indicator of a clean PC," says Nazario. "Antivirus software simply can't keep up with the number of threats. It's frustrating [that] we don't have significantly better solutions for the average home user, more widely deployed." Even if your PC's antivirus check comes out clean, be vigilant. Microsoft provides a free Malicious Software Removal Tool. One version of the tool, available from both Microsoft Update and Windows Update, is updated monthly; it runs in the background on the second Tuesday of each month and reports to Microsoft whenever it finds and removes an infection.

Reference

Amber Viescas, What are web bots [online]. Retrieved 25 July 2001.

URL: http://www.ehow.com/info_8783848_bots.html

Tony Bradley, What is a bot? [online].

URL: http://netsecurity.about.com/od/frequentlyaskedquestions/qt/pr_bot.htm

Augusto Ellacuriaga, Practical Solutions for Blocking Spam, Bots and Scrapers [online]. Retrieved 25 June 2008.

URL:

Review of HotBot

- HotBot (which is actually a Yahoo!/Inktomi database, and the version reviewed here)

- Ask Jeeves (the Teoma databases)

- Advanced searching capabilities

- Quick check of three major databases

- Advanced search help

- Does not include all advanced features of each of the four databases

- No cached copies of pages

- Only displays a few hits from each domain with no access to the rest in Inktomi

Friday, 14 October 2011

IBM WebFountain

The project represents one of the first comprehensive attempts to catalog and interpret the unstructured data of the Web in a continuous fashion. To this end its supporting researchers at IBM have investigated new systems for the precise retrieval of subsets of the information on the Web, real-time trend analysis, and meta-level analysis of the available information of the Web.

Factiva, an information retrieval company owned by Dow Jones and Reuters, licensed WebFountain in September 2003, and has been building software which utilizes the WebFountain engine to gauge corporate reputation. Factiva reportedly offers yearly subscriptions to the service for $200,000. Factiva has since decided to explore other technologies, and has severed its relationship with WebFountain.

WebFountain is developed at IBM's Almaden research campus in the Bay Area of California.

IBM has developed software called UIMA (Unstructured Information Management Architecture) that can be used to analyze unstructured information. It can perhaps help perform trend analysis across documents, determine the theme and gist of documents, and allow fuzzy searches on unstructured documents.

http://www.redbooks.ibm.com/abstracts/redp3937.html

http://news.cnet.com/IBM-sets-out-to-make-sense-of-the-Web/2100-1032_3-5153627.html

Facts on the Spambot

Fourth Poll Analysis

Thursday, 13 October 2011

Shopbot

- Easy navigation

- Well-organized results

- Sorting by total cost (including shipping, handling, taxes, and restocking fees)

- Prices from a large number of retailers
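Sorting by total cost, as listed above, means adding every charge to the sticker price before comparing offers. A minimal sketch with hypothetical retailers and prices, using `Decimal` so currency amounts add up exactly; the `Offer` fields are assumptions modeled on the charges named in the list.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Offer:
    retailer: str
    price: Decimal
    shipping: Decimal = Decimal("0")
    handling: Decimal = Decimal("0")
    taxes: Decimal = Decimal("0")
    restocking_fee: Decimal = Decimal("0")

    @property
    def total_cost(self) -> Decimal:
        # Every charge the buyer could pay, not just the sticker price.
        return (self.price + self.shipping + self.handling
                + self.taxes + self.restocking_fee)


def sort_by_total_cost(offers: list[Offer]) -> list[Offer]:
    return sorted(offers, key=lambda offer: offer.total_cost)


offers = [  # hypothetical prices from three made-up retailers
    Offer("Shop A", Decimal("20.00"), shipping=Decimal("5.00")),
    Offer("Shop B", Decimal("22.00")),
    Offer("Shop C", Decimal("19.00"), shipping=Decimal("2.00"), taxes=Decimal("1.50")),
]
for offer in sort_by_total_cost(offers):
    print(offer.retailer, offer.total_cost)
```

Note that the cheapest sticker price (Shop C) is not the cheapest overall once shipping and taxes are added, which is exactly why a shopbot sorts by total cost.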

Friday, 7 October 2011

Web Bot: 1.2 billion dead in BP oil spill, Nov. 2010 nuclear war. Accurate? Will ETs intervene?

Webbot Command Line Syntax

- References

- Henrik Frystyk Nielsen, Webbot Command Line Syntax, Retrieved 04/05/1999

- URL : http://www.w3.org/Robot/User/CommandLine.html

Thursday, 6 October 2011

ATTENTION! Contest coming soon

Hello everyone, we would like to inform you that we will be holding a Crossword Puzzle contest soon. All of the puzzle questions will be related to our title, "Web Bot".

Participants should follow the rules below:

| Field | Details |
| --- | --- |
| Name | |
| IC Number | |
| Gender | |
| Email address | |
| State | |
| City | |
| Contact Number | |

PRIZE

Internet Bots

An Internet bot is a software application that performs repetitive, automated tasks on the Internet that would otherwise take humans a long time to do. The most common Internet bots are spider bots, which are used for web server analysis and file data gathering. Bots are also used to provide the higher response rates required by some online services, such as online auctions and online gaming.

Web interface programs such as instant messaging and Internet Relay Chat applications can also be used by Internet bots to provide automated responses to customers. These bots can give weather updates, currency exchange rates, sports results, telephone numbers, etc. Examples are Jabberwacky on Yahoo! Messenger and SmarterChild on AOL Instant Messenger. Moreover, bots may be used as censors in chat rooms and forums.
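A keyword-matching responder is the simplest way such a bot can answer requests for weather, exchange rates or sports results. The sketch below is a hypothetical minimal version: the canned answers are placeholders where a real bot would call a live data service, and it is not meant to reflect how Jabberwacky or SmarterChild were implemented.

```python
import re

# Hypothetical canned answers; a real bot would query a live weather,
# exchange-rate or sports-results service instead.
ANSWERS = {
    "weather": "Weather update: (would be fetched from a weather service)",
    "exchange": "Exchange rates: (would be fetched from a currency service)",
    "sports": "Sports results: (would be fetched from a results feed)",
}


def reply(message: str) -> str:
    """Answer with the first topic whose keyword appears in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for keyword, answer in ANSWERS.items():
        if keyword in words:
            return answer
    return "Sorry, I can only help with: " + ", ".join(ANSWERS)


print(reply("What's the weather like today?"))
print(reply("Tell me a joke"))
```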

Today, bots are used in even more applications and are even available for home and business use. These new bots are based on a code called LAB code which uses the Artificial Intelligence Mark-up Language. Some sites like Lots-A-Bots and RunABot offer these types of services where one can send automated IMs, emails, replies, etc.

Third Poll Result Analysis

Friday, 30 September 2011

Googlebot

Saturday, 24 September 2011

Second poll result

Thursday, 22 September 2011

A brief explanation of web bots (podcast)

I am Liew Wee Sheng. Web bots simply crawl the web the same way Google crawls it, at regular intervals, to catch new and existing websites and detect relevant keywords. This is the most important concept of web bots, because it sets the limits on what web bots are able to predict. It also explains why it's impossible to predict any end-of-the-world prophecy. Why is that? The main goal of a web bot is simply to crawl the web the same way Google would, to extract important information from websites. That important information is usually the most relevant keywords on a website, combined with an algorithm that is able to get the meaning of the sentences the keywords are used in. This information is then put in a large database, and the final goal is to compare similar topics to determine whether they point towards similar conclusions.

I am Chew Chu Chiang. The web contains information written by humans; web bots crawl that information, find correlations and make predictions. There is nothing supernatural about web bots. They gather, keep and interpret information in a way the human brain can't. Google uses the data it collects to do a lot of other things besides giving you search results, but it wouldn't be able to predict things humans don't have control over. I think web bots will have a nice future, but keep in mind that the web is man-made and the bots crawl the web, so their capacities have their own limits.

I am Yao Weng Sheng. We have to be careful here, because web bots do have a power we don't have: merging all that information across the web and trying to find a correlation. So they can go a little further than what a single human can do, but they can't go any further than what's possible for humans to predict. If it were really possible to predict incredible things, Google would have the answer to every question. Wait a minute… they do! Seriously, it's possible to extract relevant information from the web, but there is a limit. Google uses the data it collects to do a lot of other things besides giving you search results, but it wouldn't be able to predict things humans don't have control over even if it tried. The same goes for web bots, except they are much smaller than Google.

Friday, 16 September 2011

The TimeWave

The TimeWave is a mathematical program that purports to measure the ebb, flow and rate of novelty in our world. The TimeWave depicts increasingly greater magnitudes of novelty as we approach Dec. 21, 2012, the day the Mayan Long Count Calendar starts anew. The TimeWave was developed independently of Mayan Calendar knowledge. TimeWave theory is based on the mathematics of the ancient Chinese divinatory system known as the I Ching, or Book of Changes. The famed ethnobotanist Terence McKenna is the person responsible for developing TimeWave theory. The TimeWave takes the form of a software program that generates a wave graph plotting a timeline over 4,000 years in duration. The TimeWave can be mapped using 5 different number sets. Certain versions are said to be more "mathematically sound" than others. They all end on the same date, Dec. 21, 2012, but the wave patterns of peaks and valleys vary among the different versions.

Wednesday, 14 September 2011

Web Spider

Tuesday, 13 September 2011

Web Bot Project Predictions and 2012

The earliest and most eerie of the big predictions came in June 2001. The Web Bot program indicated that a major life-changing event would take place within the next few months. Based on the web chatter picked up by the Web Bot, they concluded that a major event would take place soon. Unfortunately, the Web Bots proved to be prophetic, as the World Trade Center and the Pentagon were attacked on September 11th, 2001.

According to the Web Bot Project, the Web Bot predicts major calamitous events to unfold in 2012!

Credits to:

http://2012supplies.com/what_is_2012/web_bot_2012.html

Thursday, 1 September 2011

Type of web bot: crawler